Software & Hardware Systems

eTube

May - Sept 2022

Live improvisation between Tommy Davis and Spire Muse improvising agents. Spire Muse is a co-creative musical agent that uses corpus recordings and machine listening to interact and improvise with human musicians. Using recordings preloaded into its memory, it reorganizes fragments of these recordings into improvised phrases based on the musical context and in reaction to the human performer.

Tommy Davis, eTube

Spire Muse, tube

This recording was created as part of a CIRMMT Inter-centre Research Exchange at the Metacreation Lab for Creative AI at Simon Fraser University Surrey Campus with financial support from the Centre for Interdisciplinary Research in Music Media and Technology (CIRMMT) at McGill University.


Calliope

2022 - ongoing

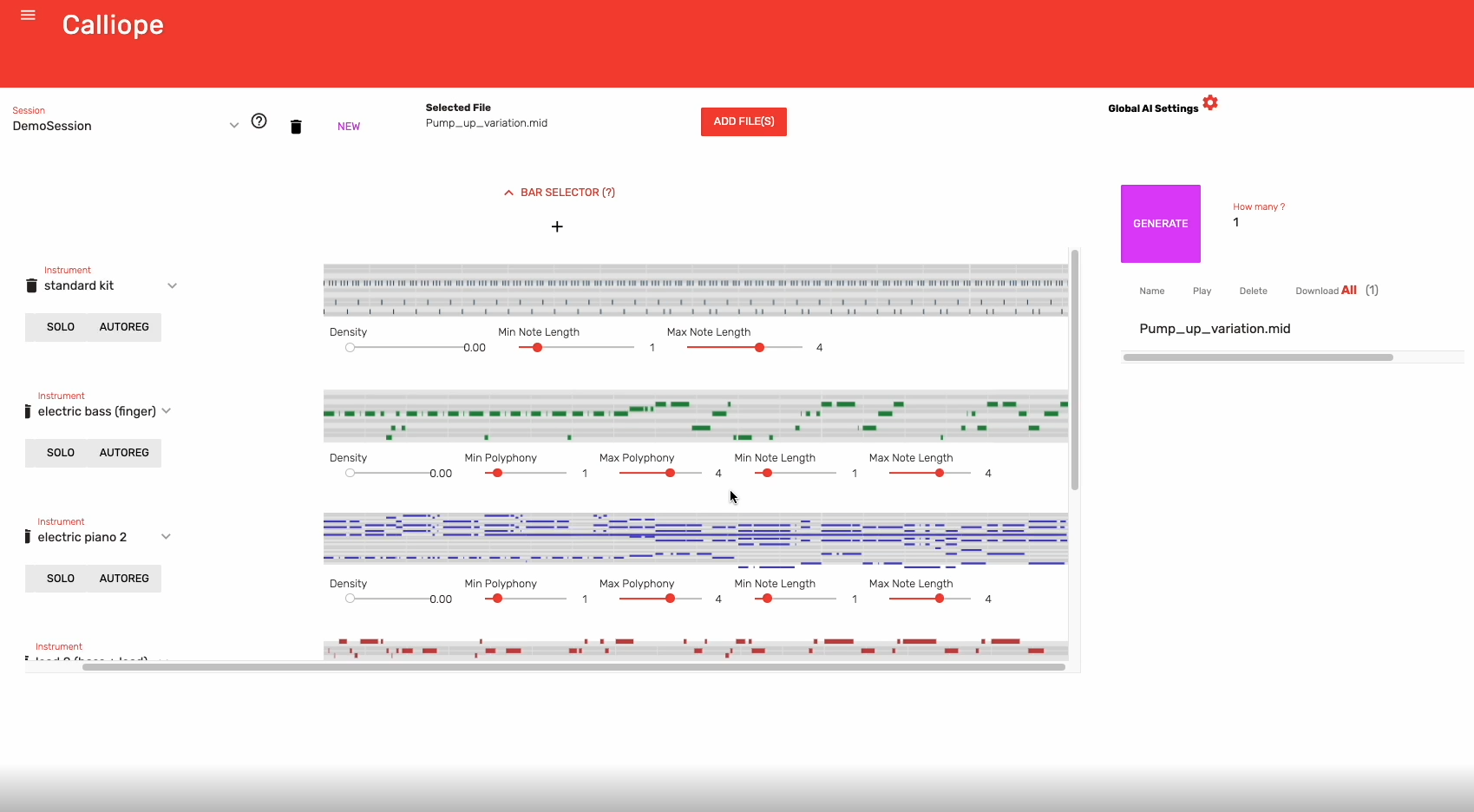

Calliope is an interactive environment that uses the Multi-Track Music Machine (MMM) for symbolic music generation in computer-assisted composition. Calliope evolved from the (now archived) Apollo project.

The user can generate or regenerate symbolic music from a seed MIDI file through a practical, easy-to-use graphical user interface (GUI). The system can interface with your favorite DAW (Digital Audio Workstation), such as Ableton Live, via MIDI streaming.


PreGLAM-MMM

2022 - ongoing

Multi-Track Music Machine (MMM) is a generative music creation system based on the Transformer architecture, developed by Jeff Enns and Philippe Pasquier. We apply MMM to create an AI-generated score for a video game, which adapts to the gameplay based on modeled emotional perception. We manually compose a score using the IsoVAT Composition Guide and use it as input for MMM. MMM provides AI-generated variations on our score, expanding it from 27 composed clips to ~14 trillion unique musical arrangements. These variations are controlled during gameplay by the PreGLAM affect model. The result is an AI-generated score that responds in real time to match the audience's emotional perception of the gameplay.


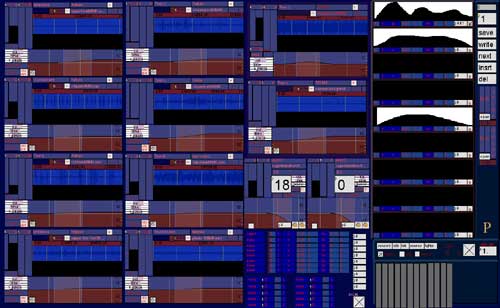

MMM4Live

2019 - 2020

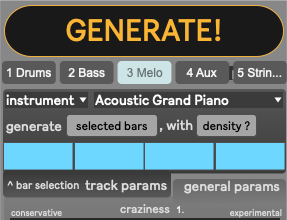

MMM4Live is a Multi-Track MIDI Music Generation Machine for Ableton Live. The MMM4Live plugin is based on the MMM project, a generative music creation system based on the Transformer architecture, developed by Jeff Enns and Philippe Pasquier. The system generates multi-track music and gives users a fine degree of control over iterative resampling driven by machine learning.

Based on an auto-regressive model, the system is capable of generating music from scratch using a wide range of preset instruments. Inputs from one track can condition the generation of new tracks, resampling MIDI input from either the user or the system into further layers of musical compositions.


MAVi

2016 - 2019

Movement data is fascinating to data artists for its richness of expression and great potential. We explore this kind of data to generate video sequences, and we created MAVi, a new tool for video creation that allows movement data visualization, real-time manipulation, and recording.


DJ-MVP

2016 - ongoing

The automatic music video producer (DJ-MVP) is a computationally generative audio-visual system that renders music videos. The system uses a given target song and generates audio-video mashups for it.


MoDa - The Open Source Movement Database

2015 - ongoing

This Movement Database is part of the M+M: Movement + Meaning Middleware project, which is intended to enable researchers to construct meaningful semantic models for movement that can interpret human movement data, construct machine-learning models for movement recognition and movement analytics, represent semantic properties of movement behaviours for virtual avatars in online games and online performance, and map movement data as a controller for online navigation, collaboration, distributed performance and as input to search engines that can recognize and tag existing movement databases.


Mova

2014 - 2019

Mova is an interactive movement tool that uses aesthetic interaction to interpret and view movement data. This publicly accessible, open-source, web-based viewer links with our movement database, allowing interpretation, evaluation, and analysis of multi-modal movement data. Mova integrates an extensible library of feature extraction methods with a visualization engine. Using an open-source, web-based environment, users can load movement data, select their desired body parts, and choose the features to be extracted and visualized. Mova can be used to observe the movements of performers, validate and compare feature extraction methods, or develop new feature extraction methods and visualizations.
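The load, select, and extract workflow described above can be illustrated with a minimal sketch. It is not Mova's actual code or API: the data layout, the frame rate, the `speed` feature, and the `FEATURES` registry are all hypothetical names chosen for the example.

```python
import math

# Hypothetical movement data: one list of (x, y, z) positions per body part,
# sampled at a fixed frame rate (assumed value).
FRAME_RATE = 30.0  # frames per second


def speed(positions, frame_rate=FRAME_RATE):
    """Return the frame-to-frame speed of one body part, in units per second."""
    return [
        math.dist(a, b) * frame_rate
        for a, b in zip(positions, positions[1:])
    ]


# A small registry standing in for an extensible feature library.
FEATURES = {"speed": speed}


def extract(data, body_parts, feature_names):
    """Compute each requested feature for each selected body part."""
    return {
        (part, name): FEATURES[name](data[part])
        for part in body_parts
        for name in feature_names
    }


data = {
    "left_hand": [(0.0, 0.0, 0.0), (0.0, 0.3, 0.4), (0.0, 0.3, 0.4)],
    "head": [(0.0, 1.7, 0.0), (0.0, 1.7, 0.0), (0.0, 1.7, 0.0)],
}
result = extract(data, ["left_hand"], ["speed"])
```

In this sketch, adding a new feature only means adding a function to the registry, which is one simple way to keep such a library extensible.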


Contact Sensor

2002 - 2006

This is a new type of sensor based on the electrical conductivity of the human body, developed in collaboration with the contemporary dance company Le Corps Indice. A first prototype was developed in the context of a course on dance and new technologies, team-taught with a choreographer at the Ateliers de Danse Moderne Inc. (ADMI, Montréal) in 2004. A more sophisticated version was developed during artistic residencies at la Cité des Arts (TOHU, Montréal) and LANTISS in 2005.


Improvising Automata

2001 - 2006

Initially developed for the Machines12 event, this improvising automaton is an autonomous artificial agent that can improvise sound-music with one or more human musicians. The agent is capable of hearing and analysing an incoming audio signal in both the temporal and spectral domains. The musical behavior of this proactive agent is controlled by a cellular automaton inspired by von Neumann's work on abstract machine reproduction. Each cell contains a sound object that is diffused according to the activation level of the cell and updated according to what the agent is hearing.
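The cell mechanism described above can be sketched as a toy example. This is not the original implementation: the one-dimensional ring of cells, the weighted update rule, the `heard_energy` input, and the playback threshold are assumptions made for illustration. In particular, the sketch lets the incoming audio nudge each cell's activation, whereas the original may update the sound objects themselves.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    sound: str         # label standing in for a stored sound object
    activation: float  # activation level in [0, 1]


def step(cells, heard_energy, threshold=0.5):
    """Advance the automaton one step and return the sounds to diffuse.

    Hypothetical rule: each cell blends its own activation with the mean of
    its two ring neighbours and with the energy of the incoming audio.
    """
    n = len(cells)
    previous = [c.activation for c in cells]
    for i, cell in enumerate(cells):
        neighbours = (previous[i - 1] + previous[(i + 1) % n]) / 2
        updated = 0.5 * previous[i] + 0.3 * neighbours + 0.2 * heard_energy
        cell.activation = min(1.0, max(0.0, updated))
    # Only sufficiently active cells diffuse their sound object.
    return [c.sound for c in cells if c.activation >= threshold]


cells = [Cell("grain", 0.9), Cell("drone", 0.1), Cell("click", 0.6)]
playing = step(cells, heard_energy=1.0)
```

With these made-up starting values, a loud input keeps the already active cells playing while the quiet "drone" cell rises but stays just under the threshold.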


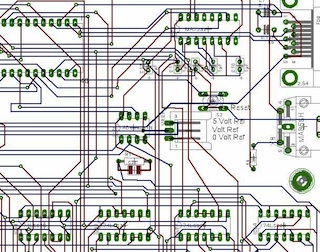

Level Control System

2001 - 2005

LCS is a hardware and software spatialization device based on level control. I have been working with LANTISS (Laboratory for New Technologies for Image, Scene and Sound, Laval University, Quebec) on their 24-output-channel Matrix3 system during an exploratory residency with David Michaud and the electroacoustic composer Christian Calon. This system was also used for P's Faisceau d'épingles de verre project (see the multi-disciplinary art section below).
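Level-control-based spatialization is commonly understood as routing each input to each loudspeaker through an adjustable gain. The sketch below illustrates that general idea only. It assumes a plain gain matrix and is not a description of how the Matrix3 system works internally; the function name and routing values are hypothetical.

```python
def spatialize(inputs, gains):
    """Mix one frame of input samples to the output channels.

    inputs: one sample per input channel.
    gains:  gains[out][inp] is the level of input `inp` sent to output `out`.
    """
    return [
        sum(g * x for g, x in zip(row, inputs))
        for row in gains
    ]


NUM_OUTPUTS = 24  # matches the 24 output channels mentioned above

# Hypothetical routing: input 0 shared equally between outputs 0 and 1,
# input 1 sent at full level to output 23.
gains = [[0.0, 0.0] for _ in range(NUM_OUTPUTS)]
gains[0][0] = 0.5
gains[1][0] = 0.5
gains[23][1] = 1.0

frame = spatialize([1.0, -0.25], gains)
```

Moving a sound through the room then amounts to changing the entries of `gains` over time.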


Z

1998 - 2005

This software was developed by Pascal Balthazar and the Computer Music Group of the GMEA (Albi's Electro-acoustic Music Group, France). It is a sound processing and diffusion platform adapted to the scenic arts. Its modular structure, combined with powerful addressing and control facilities, enables full exploitation of new scenic technologies (sensors, controllable scenic hardware, etc.). I have been adapting, testing, and refining Z for several projects, including solo music performances, Faisceau d'épingles de verre, and work with Le Corps Indice.
